Research Projects

Rapid Navigation in Diverse Environments

This research develops new techniques for rapid navigation through forests, caves, and other cluttered, unstructured environments.

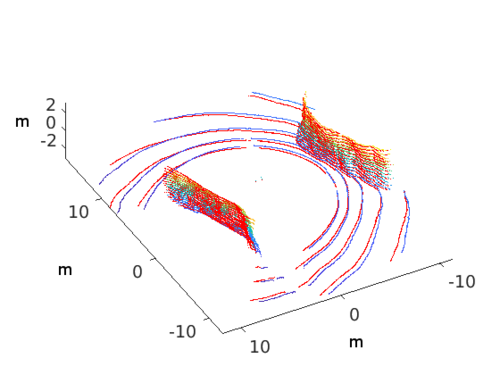

Large-Scale Decentralized Multirobot Active Search

This research project uses reinforcement learning to enable decentralized active search by teams of robots at large scale.

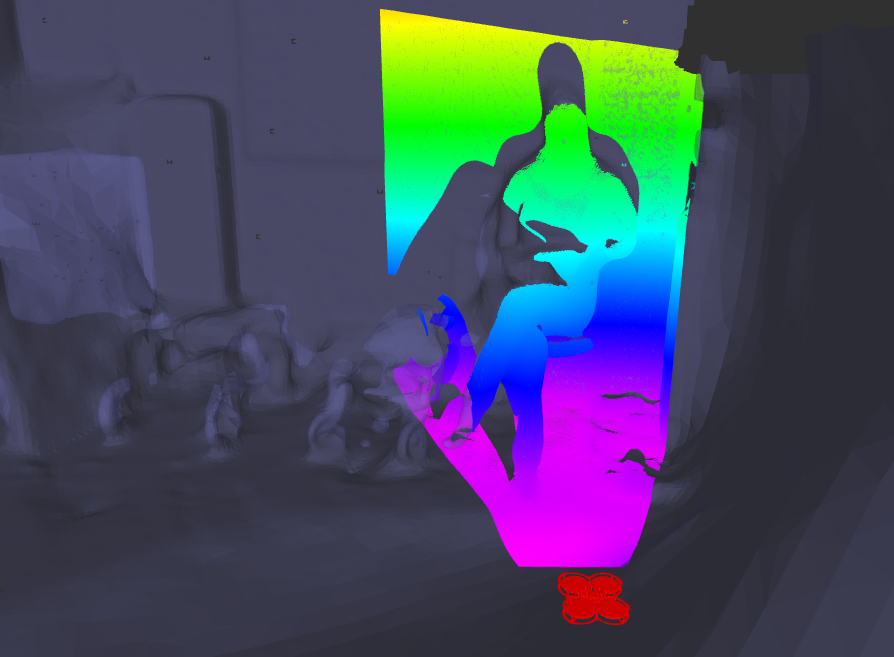

Collaborative Human-Robot Exploration via Implicit Coordination

This research seeks to enable collaborative human-robot exploration of a priori unknown environments through implicit coordination.

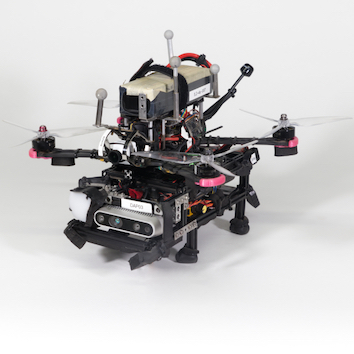

Scalable and Adaptive Aerial Robotic Exploration

This research project develops mapping and planning methods for multirotors to enable memory-efficient exploration while generating a high-fidelity map of the environment.

Assistive Adaptive-Speed Multirotor Teleoperation

This research project seeks to improve the assistance provided to human operators flying multirotors through narrow gaps and tunnels.

Autonomous Cave Surveying

This research program develops a method for surveying caves in complete darkness with an autonomous aerial vehicle equipped with a depth camera for mapping, a downward-facing camera for state estimation, and forward- and downward-facing lights.

Variable Resolution Occupancy Mapping using Gaussian Mixture Models

This research presents a method for deriving occupancy at varying resolution by sampling points from the Gaussian mixture distribution and raytracing from each sample to the camera position.
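The general idea of sampling a mixture and raytracing back to the sensor can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation. It assumes the GMM is given as NumPy arrays of weights, means, and covariances. It uses simple fixed-step ray marching rather than an exact voxel traversal to count, per voxel, how often a sample ends there (hit) or a ray passes through (miss). The function names `sample_gmm`, `occupancy_at_resolution`, and `occupancy_probability` are hypothetical.

```python
import numpy as np

def sample_gmm(weights, means, covs, n, rng):
    """Draw n points from a GMM (hypothetical helper for illustration)."""
    # Pick a mixture component for each sample, then draw from that Gaussian.
    comps = rng.choice(len(weights), size=n, p=weights)
    return np.array([rng.multivariate_normal(means[k], covs[k]) for k in comps])

def occupancy_at_resolution(samples, camera, res):
    """Count hits and misses per voxel for a grid with cell size `res`.

    Assumption: fixed-step ray marching at half the cell size, which can
    skip corner-clipped voxels (an exact traversal would not).
    """
    hits, misses = {}, {}
    for p in samples:
        end = tuple(np.floor(p / res).astype(int))  # voxel containing the sample
        hits[end] = hits.get(end, 0) + 1
        d = p - camera
        n_steps = int(np.linalg.norm(d) / (0.5 * res))
        passed = set()
        for t in np.linspace(0.0, 1.0, n_steps, endpoint=False):
            v = tuple(np.floor((camera + t * d) / res).astype(int))
            if v != end:
                passed.add(v)  # the ray crossed this voxel before the sample
        for v in passed:
            misses[v] = misses.get(v, 0) + 1
    return hits, misses

def occupancy_probability(hits, misses):
    """Empirical occupancy estimate hits / (hits + misses) per voxel."""
    probs = {}
    for v in set(hits) | set(misses):
        h, m = hits.get(v, 0), misses.get(v, 0)
        probs[v] = h / (h + m)
    return probs
```

Because only the mixture and the camera position are needed, the same samples can be re-raytraced with a smaller `res` to obtain a finer grid; this is one way the resolution could be chosen at query time.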

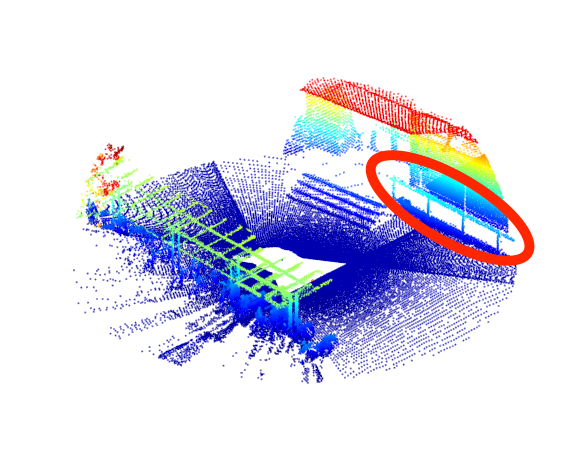

On-Manifold GMM Registration

This research develops a method to estimate the relative position and orientation (pose) of a sensor from successive depth or LiDAR observations. The method represents each sensor observation as an approximate continuous belief distribution. The results show that the method outperforms the state of the art.
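To illustrate what registering two Gaussian mixtures involves, here is a deliberately simplified planar sketch. It is not the on-manifold method described above. It assumes 2-D mixtures with isotropic components and scores a candidate rigid transform by the overlap integral between the transformed source GMM and the target GMM, which is a kernel-correlation style objective. The angle parameter is the exponential coordinate of planar rotation. The sketch optimizes over (θ, tx, ty) with SciPy's Nelder-Mead rather than on-manifold steps in 3-D. All function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize

def gaussian_overlap(mu_a, var_a, mu_b, var_b):
    """Integral of the product of two isotropic 2-D Gaussians.

    Equals N(mu_a; mu_b, (var_a + var_b) I).
    """
    s = var_a + var_b
    d2 = np.sum((mu_a - mu_b) ** 2)
    return np.exp(-0.5 * d2 / s) / (2.0 * np.pi * s)

def transform(params, pts):
    """Apply a planar rigid transform (theta, tx, ty) to row-vector points."""
    theta, tx, ty = params
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    return pts @ rot.T + np.array([tx, ty])

def neg_overlap(params, src, tgt):
    """Negative overlap between transformed source GMM and target GMM.

    Each GMM is (weights, means, variances). Isotropic covariances are
    unchanged by rotation, so only the means are transformed.
    """
    ws, ms, vs = src
    wt, mt, vt = tgt
    moved = transform(params, ms)
    total = 0.0
    for i in range(len(ws)):
        for j in range(len(wt)):
            total += ws[i] * wt[j] * gaussian_overlap(moved[i], vs[i], mt[j], vt[j])
    return -total

def register(src, tgt, x0=(0.0, 0.0, 0.0)):
    """Estimate (theta, tx, ty) aligning src to tgt by maximizing overlap."""
    res = minimize(neg_overlap, np.asarray(x0, dtype=float), args=(src, tgt),
                   method="Nelder-Mead",
                   options={"xatol": 1e-6, "fatol": 1e-10, "maxiter": 2000})
    return res.x
```

Maximizing overlap is a natural choice because, for an asymmetric mixture, the integral is largest when the transformed source coincides with the target. A real system would also need a good initial guess, since the objective can have local optima for large motions.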

Efficient Multi-Sensor Exploration using Dependent Observations and Conditional Mutual Information

This research develops a method to leverage conditionally dependent sensor observations for multi-modal exploration.
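The project's exact formulation is not stated here. As a generic illustration of the quantity in the title, conditional mutual information for discrete variables is I(X;Y|Z) = Σ p(x,y,z) log[p(z) p(x,y,z) / (p(x,z) p(y,z))]. It measures how much Y still reveals about X once Z is known. In an exploration setting one might read X as a map variable, Y as a candidate observation from one sensor, and Z as a dependent observation from another sensor; that interpretation is an assumption for illustration. The sketch below computes I(X;Y|Z) in bits from a joint probability table. The function name is hypothetical.

```python
import numpy as np

def conditional_mutual_information(p_xyz):
    """I(X;Y|Z) in bits from a joint table p_xyz[x, y, z] that sums to 1."""
    p_xyz = np.asarray(p_xyz, dtype=float)
    p_z = p_xyz.sum(axis=(0, 1))   # marginal p(z)
    p_xz = p_xyz.sum(axis=1)       # marginal p(x, z)
    p_yz = p_xyz.sum(axis=0)       # marginal p(y, z)
    cmi = 0.0
    # Only nonzero entries contribute (0 * log 0 is taken as 0).
    for x, y, z in zip(*np.nonzero(p_xyz)):
        p = p_xyz[x, y, z]
        cmi += p * np.log2(p * p_z[z] / (p_xz[x, z] * p_yz[y, z]))
    return cmi
```

For instance, if Y is an exact copy of a uniform bit X and Z is independent of both, the function returns 1 bit. If X, Y, and Z are all the same bit, it returns 0, because Z already accounts for the dependence between X and Y.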