Autonomous Cave Surveying

This research program develops a method for surveying caves in total darkness using an autonomous aerial vehicle equipped with a depth camera for mapping, a downward-facing camera for state estimation, and forward- and downward-facing lights. Traditional cave surveying is labor-intensive and dangerous: surveyors risk hypothermia when collecting data over extended periods in cold, damp environments, risk injury when operating in darkness on rocky or muddy terrain, and face the potential structural instability of the subterranean environment. Although these dangers can be mitigated by deploying robots to map dangerous passages and voids, real-time feedback is often needed to operate robots safely and efficiently. Few state-of-the-art, high-resolution perceptual modeling techniques attempt to reduce their high bandwidth requirements so that they work well over low-bandwidth communication channels. To bridge this gap, this work compactly represents sensor observations as Gaussian mixture models and maintains a local occupancy grid map for a motion planner that greedily maximizes an information-theoretic objective function. The approach accommodates both limited field-of-view depth cameras and larger field-of-view LiDAR sensors and is extensively evaluated in long-duration simulations on an embedded PC. An aerial system is used to demonstrate the repeatability of the approach in a flight arena as well as the effects of communication dropouts. Finally, the system is deployed in Laurel Caverns, a commercially owned and operated cave in southwestern Pennsylvania, USA, and in a wild cave in West Virginia, USA.
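To give a rough sense of why a Gaussian mixture model can suit a low-bandwidth link, the sketch below compares the size of a raw point cloud with the size of a mixture fitted to it. It is an illustration under stated assumptions, not the encoding used by this system: the 320 x 240 depth image, the 100 mixture components, and the 4-byte floats are all hypothetical values chosen for the arithmetic.

```python
# Back-of-the-envelope message sizes. All numbers below are hypothetical.

def point_cloud_bytes(num_points, bytes_per_float=4):
    """Each 3D point carries x, y, z."""
    return num_points * 3 * bytes_per_float

def gmm_bytes(num_components, bytes_per_float=4):
    """Each 3D Gaussian component carries a weight (1 value), a mean
    (3 values), and a symmetric 3x3 covariance (6 unique values)."""
    return num_components * (1 + 3 + 6) * bytes_per_float

num_points = 320 * 240   # assumed depth image resolution, one point per pixel
num_components = 100     # assumed number of mixture components

raw = point_cloud_bytes(num_points)
compact = gmm_bytes(num_components)
ratio = raw / compact

print(f"raw point cloud: {raw} bytes")
print(f"GMM parameters:  {compact} bytes")
print(f"reduction:       {ratio:.1f}x")
```

Under these assumptions the mixture needs only ten floats per component regardless of how many points it summarizes, so the saving grows with sensor resolution. The actual ratio depends on the number of components required to model a given scene faithfully.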

Videos

Exploration of a West Virginia Cave with an Autonomous Aerial Robot

People

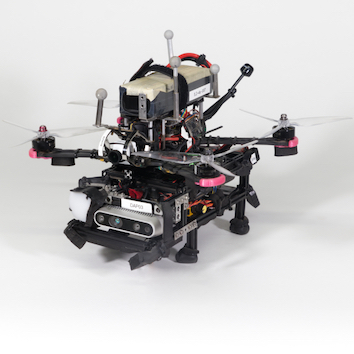

Robots

Rocky

Sponsors